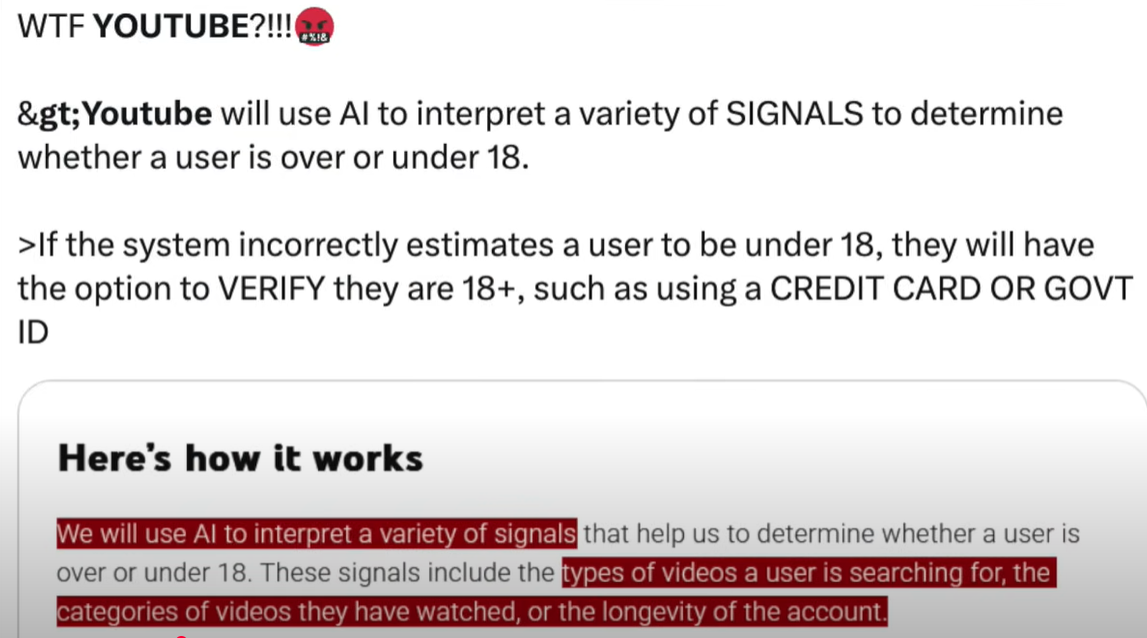

In a move that raises significant concerns over privacy and digital freedom, YouTube has begun implementing an AI-powered system to estimate users’ ages based on their online behavior rather than their declared birth dates. This shift, which comes on the heels of the controversial Online Safety Act in the United Kingdom, has ignited a fierce debate about the implications of surveillance technology in the digital age.

Previously, YouTube relied on the birth date users provided during account creation to determine whether age-specific protections applied to them. The new system phases out this method in favor of machine learning algorithms that analyze users' viewing habits and interaction patterns to estimate whether they are likely under 18. Users who are flagged face automatic restrictions, and anyone who disputes the AI's assessment must verify their age with a government-issued ID, a selfie, or a credit card.

Critics argue that this behavioral surveillance is deeply invasive and sets a dangerous precedent. The transition from a trust-based system to one rooted in suspicion fundamentally alters the relationship between users and digital platforms. Digital rights advocates warn that this approach may limit access to educational content and vital discussions, effectively silencing those who choose not to submit personal information for verification.

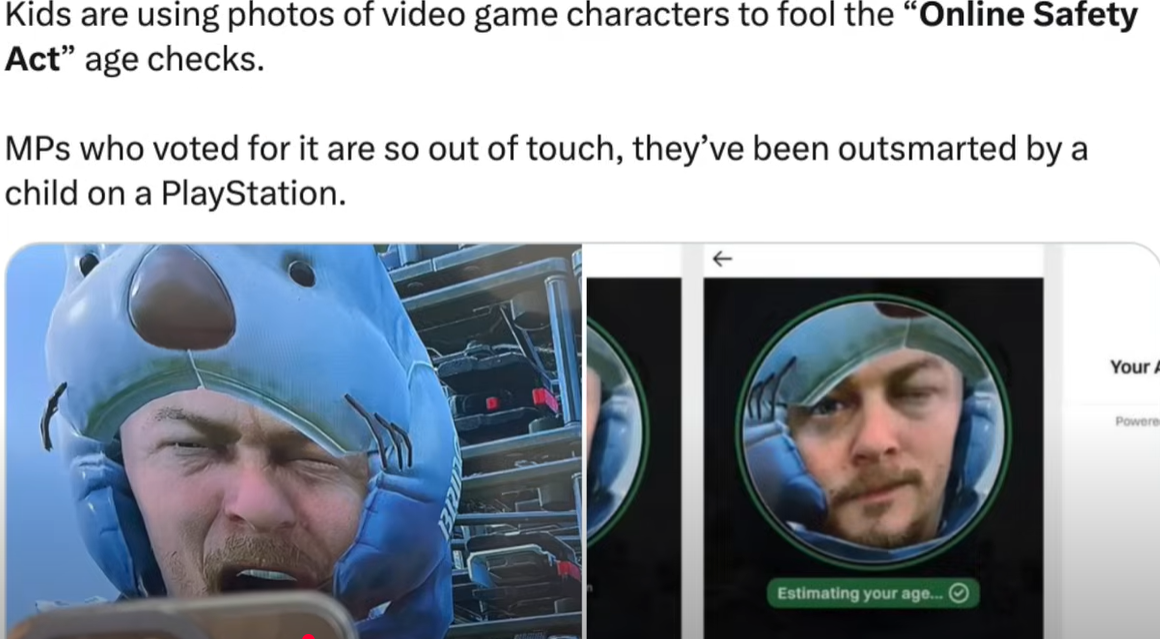

The timing of this rollout in the United States is particularly troubling, as it coincides with growing public sensitivity to digital control. The Online Safety Act in the UK was designed to protect citizens, especially young people, from harmful online content. However, it has faced backlash for overreach and for enabling corporate and governmental control over personal data. Observers note that what began as a quest for safety is increasingly perceived as a method of control that undermines user autonomy and privacy.

The implications of YouTube's new AI system extend beyond individual users. It raises critical questions about who controls access to information and what rights users retain when algorithms dictate their online experiences. As the digital landscape becomes more regulated, the internet's foundational purpose as a space for exploration and free exchange is at risk. The psychological impact on young users, who must prove their identity before they are trusted, is also concerning. The system teaches them that privacy must be sacrificed for security, a lesson many believe is not only flawed but harmful.

Internationally, the trend towards invasive surveillance is alarming. Despite resistance in the UK, other countries are considering similar policies, prompting fears of a global shift towards restricted online freedom. The conversation must center on finding ways to protect users without resorting to invasive surveillance or identity verification systems. Alternatives such as content filters, parental controls, and educational initiatives could provide safer online environments without compromising privacy.

As technology continues to advance, the need for transparency, accountability, and user rights becomes more pressing. The introduction of AI-driven moderation tools must be accompanied by clear guidelines and user consent, ensuring that individuals are treated as more than mere data points. The balance between innovation and ethics, safety and freedom, is delicate and must be navigated with care.

At this critical moment, the public's demand for clarity and control over its digital experiences is paramount. The time for debate and questioning is now, before the open internet becomes a relic of the past. As we stand on the brink of this new era in digital interaction, the choices made today will shape the future of online freedom and autonomy for generations to come.